Introducing Synapse Data Engineering in Microsoft Fabric

See Arun Ulagaratchagan’s blog post to read the full Microsoft Fabric preview announcement.

Data engineering plays an increasingly foundational role in every organization’s analytics journey. The volume of data that needs to be processed is growing faster than ever, ranging from tabular data to unstructured documents, images, IoT sensor readings, and more. All of this data needs to be ingested, processed at scale, and shared with the business. Data engineers must tackle numerous challenges, including consolidating data, addressing security, and democratizing data to serve different consumption needs. These processes are complex: data is fragmented across many sources; sharing data requires ETL jobs and synchronization, often into proprietary stores; and security must be replicated multiple times, which leads to inconsistencies. The result is friction and project roadblocks that hamper productivity and lead to frustration.

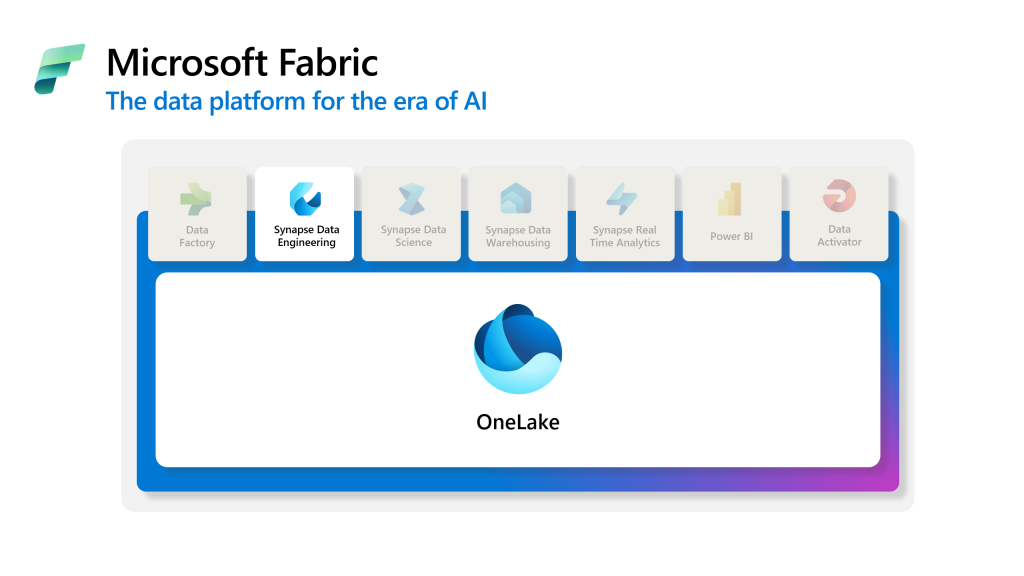

Today, we are excited to announce the preview of Synapse Data Engineering, one of the core experiences of Microsoft Fabric. Microsoft Fabric empowers teams of data professionals to collaborate seamlessly on their analytics projects end to end, from data integration to data warehousing, data science, and business intelligence. With data engineering as a core experience in Fabric, data engineers will feel right at home, able to leverage the power of Apache Spark to transform their data at scale and build out a robust lakehouse architecture.

What’s included in Synapse Data Engineering?

With Synapse Data Engineering, we aspire to streamline the process of working with your organizational data. Instead of losing cycles to the ‘integration tax’ of wiring together a collection of products, spinning up and managing infrastructure, and stitching together disparate data sources, we want data engineers to focus on the jobs to be done.

Here are some of the key Synapse Data Engineering experiences that are launching as part of Microsoft Fabric at Build:

Build a lakehouse for all your organizational data

The Synapse Data Engineering lakehouse combines the best of the data lake and the data warehouse, removing the friction of ingesting, transforming, and sharing organizational data, all in an open format. Because the lakehouse is a first-class item in the workspace, any data engineer can easily create one and work with it.

Users can bring data into the lakehouse in several ways, including dataflows and pipelines, and they can even use shortcuts to create virtual folders and tables without the data ever leaving their storage accounts. Ingested data is stored in the Delta Lake format by default, and tables are created automatically.
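What makes Delta Lake an open format is worth making concrete: a Delta table is a directory of Parquet data files plus a `_delta_log` folder of numbered JSON commit files, and any engine that replays that log in version order arrives at the same set of live data files. The following is a minimal, standard-library-only Python sketch of that replay. It is not a Fabric or Delta Lake API; `load_commits` and `active_files` are hypothetical helpers, and the sketch deliberately ignores checkpoints, deletion vectors, and non-file actions (`metaData`, `protocol`, `commitInfo`) that a real Delta reader must handle.

```python
from __future__ import annotations

import json
import re
from pathlib import Path

# Delta commit files are named by a zero-padded, 20-digit version number,
# e.g. 00000000000000000003.json. Checkpoints and other files are skipped.
COMMIT_FILE = re.compile(r"^\d{20}\.json$")


def load_commits(table_dir: str) -> list[str]:
    """Return the text of each JSON commit in <table_dir>/_delta_log, oldest first."""
    log_dir = Path(table_dir) / "_delta_log"
    names = sorted(p.name for p in log_dir.iterdir() if COMMIT_FILE.match(p.name))
    # Zero-padding makes lexicographic order identical to version order.
    return [(log_dir / name).read_text(encoding="utf-8") for name in names]


def active_files(commits: list[str]) -> set[str]:
    """Replay add/remove actions in version order to find the live data files.

    Simplified sketch: ignores checkpoints, deletion vectors, and non-file
    actions such as metaData, protocol, and commitInfo.
    """
    live: set[str] = set()
    for commit in commits:
        # Each non-empty line of a commit file is one JSON-encoded action.
        for line in commit.splitlines():
            if not line.strip():
                continue
            action = json.loads(line)
            if "add" in action:
                live.add(action["add"]["path"])
            elif "remove" in action:
                live.discard(action["remove"]["path"])
    return live
```

For a local copy of a table, `active_files(load_commits(table_dir))` returns the relative paths of its current Parquet files. Because the log, not any single engine, defines the table's state, different engines can read the same tables without copying data.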

The lakehouse also streamlines collaboration on top of the same data. Since all data in Microsoft Fabric is automatically stored in the Delta format, different data professionals can easily work together. The lakehouse comes with a SQL endpoint that provides data warehousing capabilities, including the ability to run T-SQL queries, create views, and define functions. Every lakehouse also comes with a semantic dataset, enabling BI users to build reports directly on top of lakehouse data. Power BI can connect to lakehouse data in ‘Direct Lake’ mode, which reads the data directly from the lake with no data movement and with great performance.

Runtime with great default performance & robust admin controls

We are excited to announce that the Synapse Data Engineering public preview is shipping with ‘Runtime 1.1’ which includes Spark 3.3.1, Delta 2.2 and Python 3.10. To remove friction in getting started, the Spark Runtime comes pre-wired to every Microsoft Fabric workspace.

In Microsoft Fabric, we strive to provide users with great out-of-the-box performance with no tuning required, and Spark is no exception. A variety of optimizations are built into the runtime to ensure data engineers always have a performant experience. These include Spark query optimizations such as partition caching, as well as Delta optimizations such as ‘V-order’. All Microsoft Fabric engines automatically write Delta with V-order, meaning data is automatically optimized for BI reporting, resulting in great query performance in Power BI.

We are also committed to fast startup times. In Microsoft Fabric, every workspace comes with a Spark ‘starter pool’ with default configurations. These pools are kept ‘live’, so Spark sessions now start within 5 to 15 seconds of running your notebook, at no additional cost.

Whilst out-of-the-box experiences are key, we realize admins need more granular control when managing their Spark workloads. We are therefore giving admins the ability to create their own custom Spark pools, where they can configure parameters such as node size, number of nodes, executors, and autoscale.

We are also excited to announce that Spark pools can be as small as a single node, a cost-effective option for test runs or lightweight workloads.

Admins will also be able to install public and custom libraries into the workspace pool, set the default runtime, and configure Spark properties. All notebooks and Spark jobs will inherit the runtime, libraries, and settings, so nothing needs to be managed on an artifact-by-artifact basis.
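The executor and autoscale settings described above have counterparts among the standard open-source Apache Spark properties: `spark.executor.cores`, `spark.executor.memory`, `spark.executor.instances`, and the `spark.dynamicAllocation.*` family. As a hedged illustration, the Python sketch below maps an executor size and an executor range onto those properties and renders them in `spark-defaults.conf` style. `executor_properties` and `to_spark_defaults` are hypothetical helpers, and this post does not describe how Fabric translates its pool settings (node size, number of nodes, autoscale) into Spark properties, so treat the mapping as an assumption.

```python
from __future__ import annotations


def executor_properties(cores: int, memory_gb: int,
                        min_executors: int, max_executors: int) -> dict[str, str]:
    """Map executor size and an executor range onto Apache Spark properties.

    Hypothetical helper: the property names are standard open-source Spark
    settings, but how Fabric pools set them is an assumption here.
    """
    if cores < 1 or memory_gb < 1:
        raise ValueError("cores and memory_gb must be positive")
    if max_executors < 1 or not 0 <= min_executors <= max_executors:
        raise ValueError("require 0 <= min_executors <= max_executors and max_executors >= 1")

    props = {
        "spark.executor.cores": str(cores),
        "spark.executor.memory": f"{memory_gb}g",
    }
    if min_executors == max_executors:
        # Fixed size: no autoscaling, a constant number of executors.
        props["spark.dynamicAllocation.enabled"] = "false"
        props["spark.executor.instances"] = str(max_executors)
    else:
        # Autoscale between the two bounds using Spark dynamic allocation.
        props["spark.dynamicAllocation.enabled"] = "true"
        props["spark.dynamicAllocation.minExecutors"] = str(min_executors)
        props["spark.dynamicAllocation.maxExecutors"] = str(max_executors)
        # Lets dynamic allocation work without an external shuffle service (Spark 3.0+).
        props["spark.dynamicAllocation.shuffleTracking.enabled"] = "true"
    return props


def to_spark_defaults(props: dict[str, str]) -> str:
    """Render properties as spark-defaults.conf lines ('key value'), sorted by key."""
    return "\n".join(f"{key} {value}" for key, value in sorted(props.items()))
```

For example, `print(to_spark_defaults(executor_properties(4, 28, 1, 8)))` prints an autoscaling configuration with between 1 and 8 executors. Setting `min_executors` equal to `max_executors` instead yields a fixed-size allocation, which is the closer analogue of a pool with autoscale turned off.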

Developer experience

Our goal is for every data engineer to have a delightful authoring experience, irrespective of their tooling of choice.

The primary authoring canvas in Synapse Data Engineering is the notebook. The notebook provides native lakehouse integration and built-in co-authoring, so users can easily collaborate, and it autosaves, just like Microsoft Office. Notebooks can be scheduled or added to pipelines for more complex workflows.

Data engineers who want to use ad hoc libraries during their session can install popular Python and R libraries inline with commands such as pip install. Notebooks can also reference each other for a more modular way of working.

Users who prefer low-code experiences can instead use Data Wrangler, a UI-based data prep experience built on top of pandas DataFrames. Low-code operations are automatically translated into code for transparency and reusability.

Notebooks provide fully integrated Spark monitoring inside the notebook cells. The built-in Spark advisor analyzes Spark executions and provides users with real-time advice and guidance.

Users can also navigate to the full monitoring hub, where they can monitor all current and past Spark jobs alongside other Fabric items. They can drill down into job details, view associated notebooks and pipelines, explore notebook snapshots, and navigate to the Spark UI and history server.

We know many developers prefer working in IDEs, so we are also thrilled to announce native VS Code integration with Fabric code artifacts. The Synapse VS Code extension enables users to work with their notebooks, Spark jobs, and lakehouses straight from VS Code, with full debugging support whilst using the Spark clusters in their workspace.

Finally, users who prefer to work in their own environment can use the Spark Job Definition (SJD) in Microsoft Fabric. With an SJD, users can upload their existing JAR files, tweak Spark configurations, add lakehouse references, and submit their jobs. Just like notebooks, SJDs come with monitoring, scheduling, and pipeline integration.

Coming Soon

In addition to what is shipping at Microsoft Build, a wide range of capabilities will be released in the coming months. Stay tuned for our monthly blog updates, where we will keep you posted on what is newly available. Here are the top 10 things to look forward to:

- Lakehouse sharing: End users who want to use the lakehouse for reporting or data science will be able to easily discover all the lakehouses they have been given access to inside the OneLake Data Hub, the Microsoft Fabric data discovery portal.

- Lakehouse security: With ‘One Security’, table and folder security is applied once inside the lakehouse and automatically kept in sync across all engines and even external services. This ensures that your data is protected consistently and reliably at all times.

- Spark Autotune: Autotune uses machine learning to analyze previous Spark job runs and tunes the configurations to automatically optimize performance for users.

- High Concurrency Mode: Customers will be able to share notebook sessions, further improving startup times for notebooks attached to existing sessions while also reducing costs.

- Custom live pools: Users will be able to keep their custom pools ‘live’, so these pools also benefit from fast startup times, just like starter pools.

- Environments: To give users more flexibility when managing their Spark workloads, they will be able to configure an ‘environment’, in which they can select their Spark pool and default runtime and install libraries. Environments can be attached to notebooks and Spark jobs, overriding the defaults.

- Copilot integration: Notebooks will come equipped with data-aware Copilot capabilities. Users will be able to use magic commands to generate explanations and code, and code shortcuts will help with common tasks such as bug fixes and documentation.

- VSCode.dev: In addition to the current VS Code integration, users will also be able to work with VS Code in a fully remote mode, with code automatically syncing back to the service.

- CI/CD integration: Users will be able to commit all their data engineering artifacts to a Git repository and use deployment pipelines to deploy items between dev, test, and prod.

- Microsoft Fabric SDK: Users will be able to work with data engineering items programmatically through APIs and the Fabric SDK. We will also support the Livy endpoint for programmatic batch job submission.
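For context on the Livy item above: Apache Livy exposes a REST API in which a batch job is submitted by POSTing a JSON body to `/batches`, where `file` names the application (for example a JAR or Python file) and optional fields such as `className`, `args`, and `conf` carry the main class, arguments, and Spark properties. Because Fabric's Livy endpoint is listed here as upcoming, its URL and authentication scheme are not known from this post. The Python sketch below therefore assumes a placeholder endpoint and bearer-token authentication, and it builds the request without sending it; `livy_batch_request` is a hypothetical helper.

```python
from __future__ import annotations

import json
import urllib.request


def livy_batch_request(endpoint: str, file: str,
                       class_name: str | None = None,
                       args: list[str] | None = None,
                       conf: dict[str, str] | None = None,
                       token: str | None = None) -> urllib.request.Request:
    """Build, but do not send, a POST to an Apache Livy /batches endpoint.

    Hypothetical helper: the endpoint URL and bearer-token auth are placeholders.
    """
    body: dict[str, object] = {"file": file}  # the only required Livy field
    if class_name:
        body["className"] = class_name  # main class, for JAR applications
    if args:
        body["args"] = args  # command-line arguments for the application
    if conf:
        body["conf"] = conf  # Spark configuration properties
    headers = {"Content-Type": "application/json"}
    if token:
        headers["Authorization"] = f"Bearer {token}"  # assumed auth scheme
    return urllib.request.Request(
        url=endpoint.rstrip("/") + "/batches",
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )
```

Passing the result to `urllib.request.urlopen` would submit the job. Per the Livy REST API, the response describes the new batch, including its `id` and `state`, which can then be polled with `GET /batches/{batchId}`.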

Get started with Microsoft Fabric

Microsoft Fabric is currently in preview. Try out everything Fabric has to offer by signing up for the free trial; no credit card information is required. Everyone who signs up gets a fixed Fabric trial capacity that can be used for any feature or capability, from integrating data to using Spark in notebooks. Existing Power BI Premium customers can simply turn on Fabric through the Power BI admin portal. After July 1, 2023, Fabric will be enabled for all Power BI tenants.

Sign up for the free trial. For more information read the Fabric trial docs.

Other resources

If you want to learn more about Microsoft Fabric, consider:

- Signing up for the Microsoft Fabric free trial

- Visiting the Microsoft Fabric website

- Reading the more in-depth Fabric experience announcement blogs:

  - Data Factory experience in Fabric blog

  - Synapse Data Science experience in Fabric blog

  - Synapse Data Warehousing experience in Fabric blog

  - Synapse Real-Time Analytics experience in Fabric blog

  - Power BI announcement blog

  - Data Activator experience in Fabric blog

  - Administration and governance in Fabric blog

  - OneLake in Fabric blog

  - Microsoft 365 data integration in Fabric blog

  - Dataverse and Microsoft Fabric integration blog

- Exploring the Fabric technical documentation

- Reading the free e-book on getting started with Fabric

- Exploring Fabric learn modules

- Exploring Fabric through the Guided Tour

- Watching the free Fabric webinar series

- Joining the Fabric community to post your questions, share your feedback, and learn from others

- Visiting Microsoft Fabric Ideas to submit suggestions for improvements and vote on your peers’ ideas

Learning resources

To help you get started with Microsoft Fabric, there are several resources we recommend:

- Microsoft Fabric Learning Paths: experience a high-level tour of Microsoft Fabric and how to get started

- Microsoft Fabric Tutorials: get detailed tutorials with a step-by-step guide on how to create an end-to-end solution in Microsoft Fabric. These tutorials focus on a few different common patterns including a lakehouse architecture, data warehouse architecture, real-time analytics, and data science projects.

- Microsoft Fabric Documentation: read the Fabric docs for detailed documentation on all aspects of Microsoft Fabric.

Join the conversation

Want to learn more about Microsoft Fabric from the people who created it? Join us on May 24th at 9 AM PT for a two-day live event to see Microsoft Fabric in action. These sessions will be available on demand after May 25th. Join the live event or see the full list of sessions.